LLM Bootcamp - Spring 2023

What are the prerequisites for this bootcamp?

Our goal is to get you 100% caught up on the state of the art and ready to build and deploy LLM apps, no matter how much experience you have with machine learning.

Please enjoy, and email us, tweet us, or post in our Discord if you have any questions or feedback!

Lectures

Learn to Spell: Prompt Engineering

- High-level intuitions for prompting

- Tips and tricks for effective prompting: decomposition/chain-of-thought, self-criticism, ensembling

- Gotchas: "few-shot learning" and tokenization

LLMOps

- Comparing and evaluating open source and proprietary models

- Iteration and prompt management

- Applying test-driven development and continuous integration to LLMs

UX for Language User Interfaces

- General principles for user-centered design

- Emerging patterns in UX design for LUIs

- UX case studies: GitHub Copilot and Bing Chat

Augmented Language Models

- Augmenting language model inputs with external knowledge

- Vector indices and embedding management systems

- Augmenting language model outputs with external tools

Launch an LLM App in One Hour

- Why is now the right time to build?

- Techniques and tools for the tinkering and discovery phase: ChatGPT, LangChain, Colab

- A simple stack for quickly launching augmented LLM applications

LLM Foundations

- Speed-run of ML fundamentals

- The Transformer architecture

- Notable LLMs and their datasets

Project Walkthrough: askFSDL

- Walkthrough of a GitHub repo for sourced Q&A with LLMs

- Try it out via a bot in our Discord

- Python project tooling, ETL/data processing, deployment on Modal, and monitoring with Gantry

What's Next?

- Can we build general purpose robots using multimodal models?

- Will models get bigger or smaller? Are we running out of data?

- How close are we to AGI? Can we make it safe?

Invited Talks

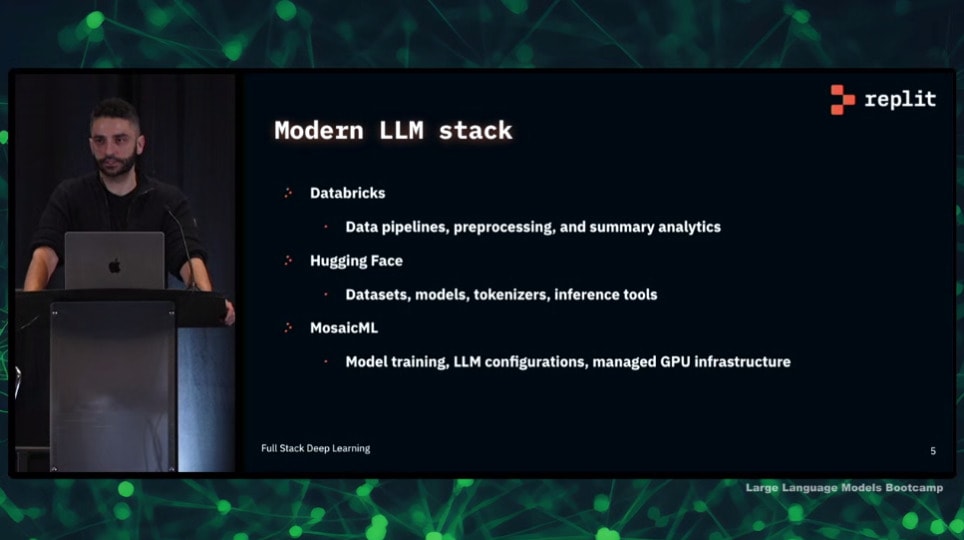

Reza Shabani: How To Train Your Own LLM

- The "Modern LLM Stack": Databricks, Hugging Face, MosaicML, and more

- The importance of knowing your data and designing preprocessing carefully

- The features of a good LLM engineer

- By Reza Shabani, who trained Replit's code completion model, Ghostwriter.

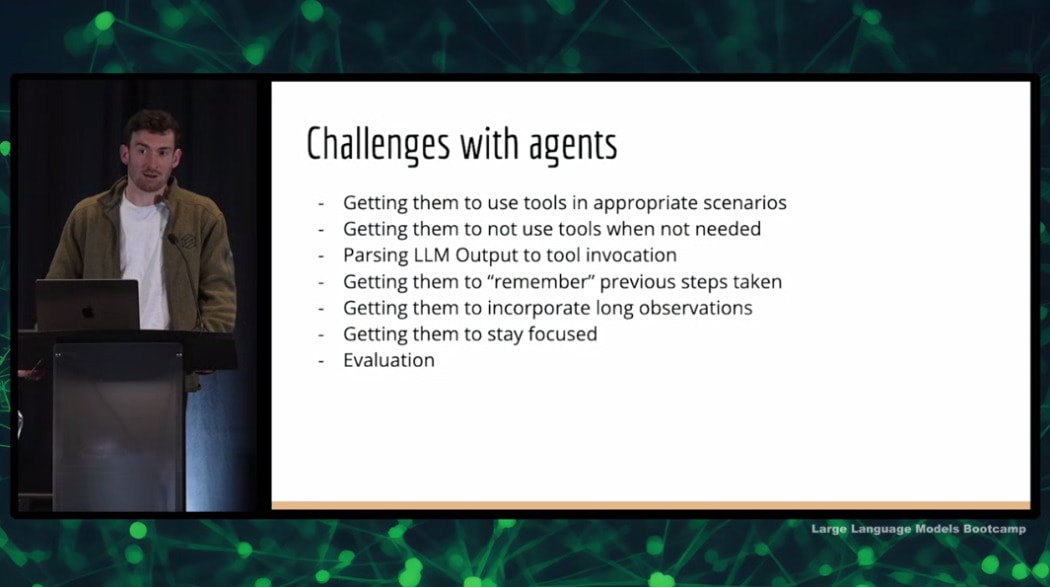

Harrison Chase: Agents

- The "agent" design pattern: tool use, memory, reflection, and goals

- Challenges facing agents in production: controlling tool use, parsing outputs, handling large contexts, and more

- Exciting research projects with agents: AutoGPT, BabyAGI, CAMEL, and Generative Agents

- By Harrison Chase, co-creator of LangChain

Fireside Chat with Peter Welinder

- With Peter Welinder, VP of Product & Partnerships at OpenAI

- How OpenAI converged on LLMs

- Learnings and surprises from releasing ChatGPT

Sponsors

We are deeply grateful to all of the sponsors who helped make this event happen.

Direct Sponsors

Compute Credit Sponsors

We are excited to share this course with you for free.

We have more great content coming soon. Subscribe to stay up to date as we release it.

We take your privacy and attention very seriously and will never spam you.